Trusting the Machine

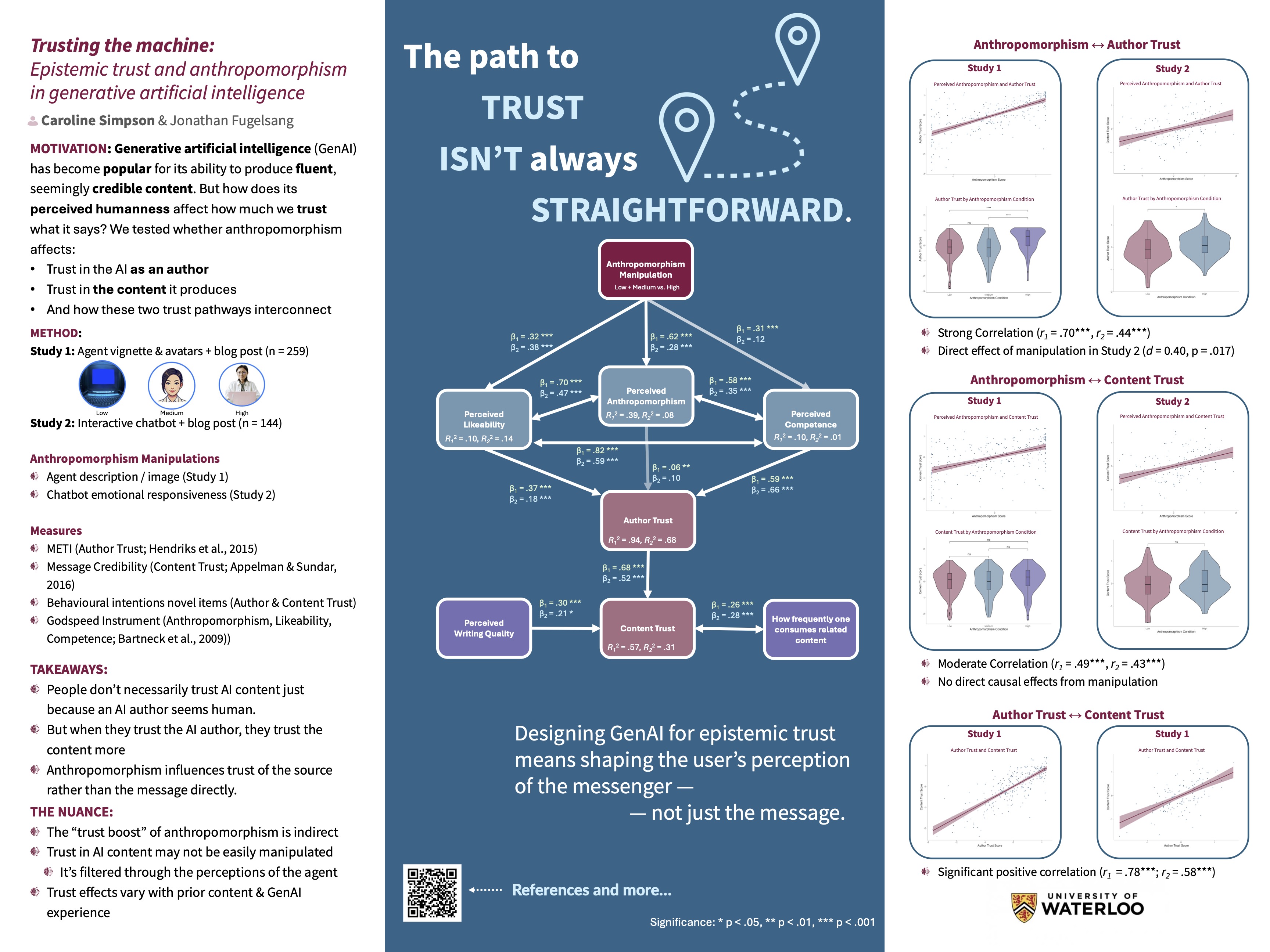

Trusting the machine: Epistemic trust and anthropomorphism in generative AI

Abstract

As artificial intelligence (AI) becomes increasingly prevalent in content creation, it is critical to understand how the perceived humanness of an AI influences trust in the information it generates. This study investigates the effect of anthropomorphism on trust in information generated by generative AI (GenAI) across two experiments in the context of science communication. We hypothesized that higher levels of anthropomorphism would be associated with greater trust in both the AI-generated content and the AI author. Experiment 1 used a between-subjects design in which 259 participants were randomly assigned to one of three anthropomorphism conditions. Participants read a description of an AI agent, which varied in degree of anthropomorphism by condition, followed by a blog post attributed to that agent. Trust in the content and in the AI author was measured using questionnaires. Results showed a significant, moderate positive correlation between anthropomorphism and content trust (r(257) = 0.48, p < .001), and a stronger positive correlation between anthropomorphism and author trust (r(257) = 0.69, p < .001). Both correlations were replicated in a follow-up experiment with 144 participants, who interacted with the AI author rather than reading a description of it: anthropomorphism correlated with author trust (r(142) = 0.44, p < .001) and with content trust (r(142) = 0.43, p < .001). Additionally, a positive effect of anthropomorphism was evident on author trust (t(139.89) = -2.43, p = .016, d = -0.40), but not on content trust (t(141.80) = -1.14, p = .256, d = -0.19). These findings suggest that anthropomorphic design influences trust in GenAI as an author and, to a lesser extent, trust in the content it produces.

Additional Post Hoc Results

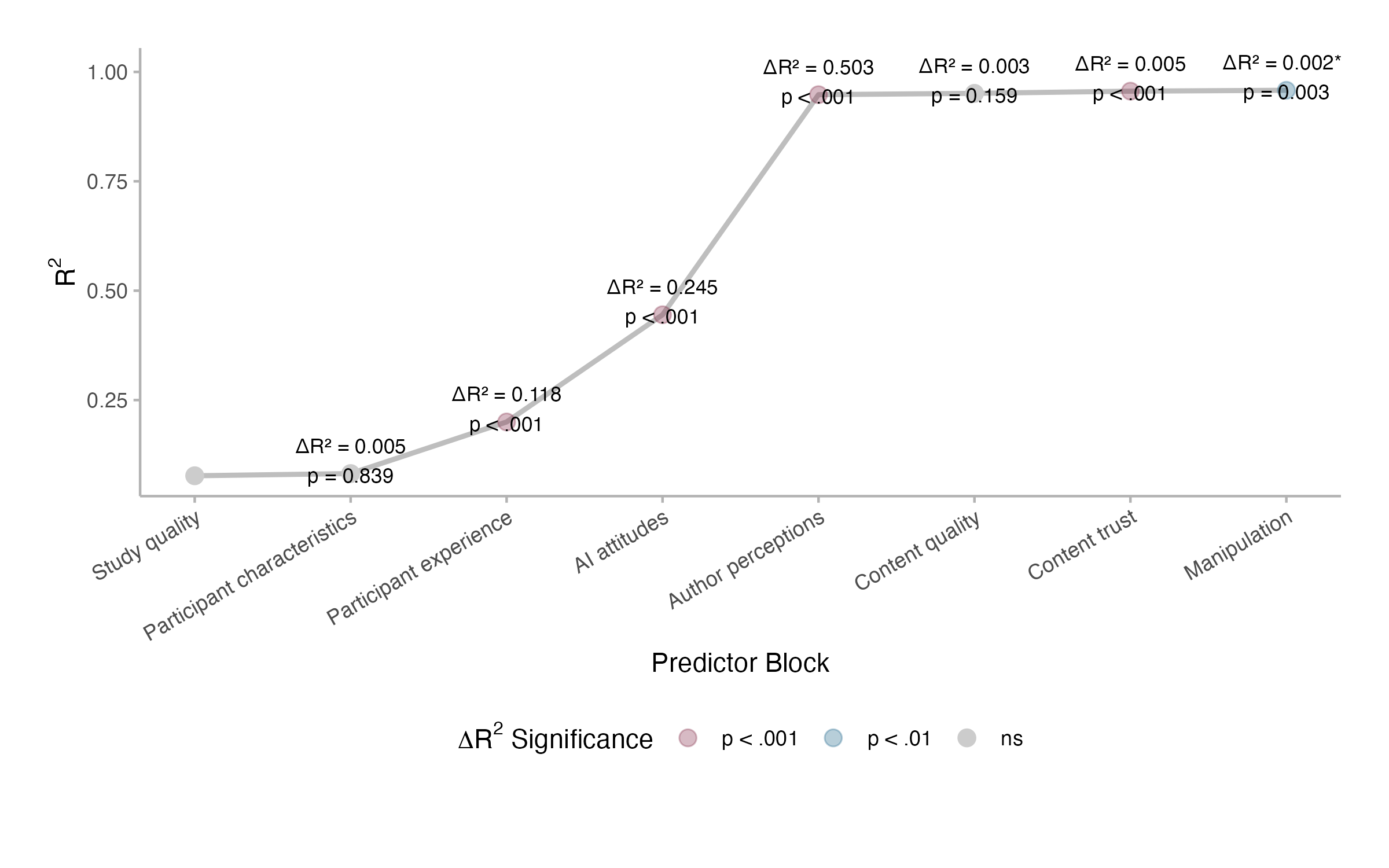

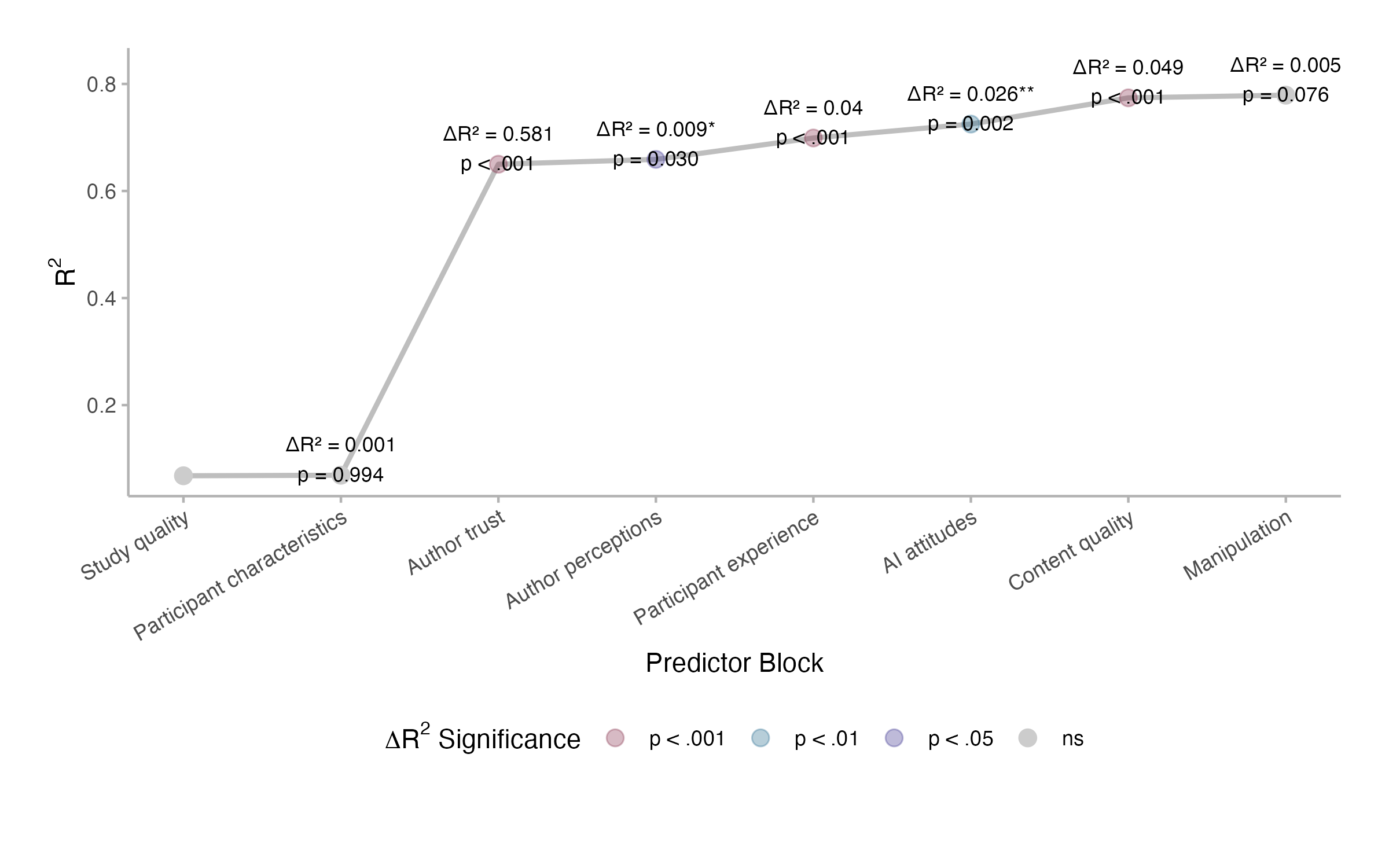

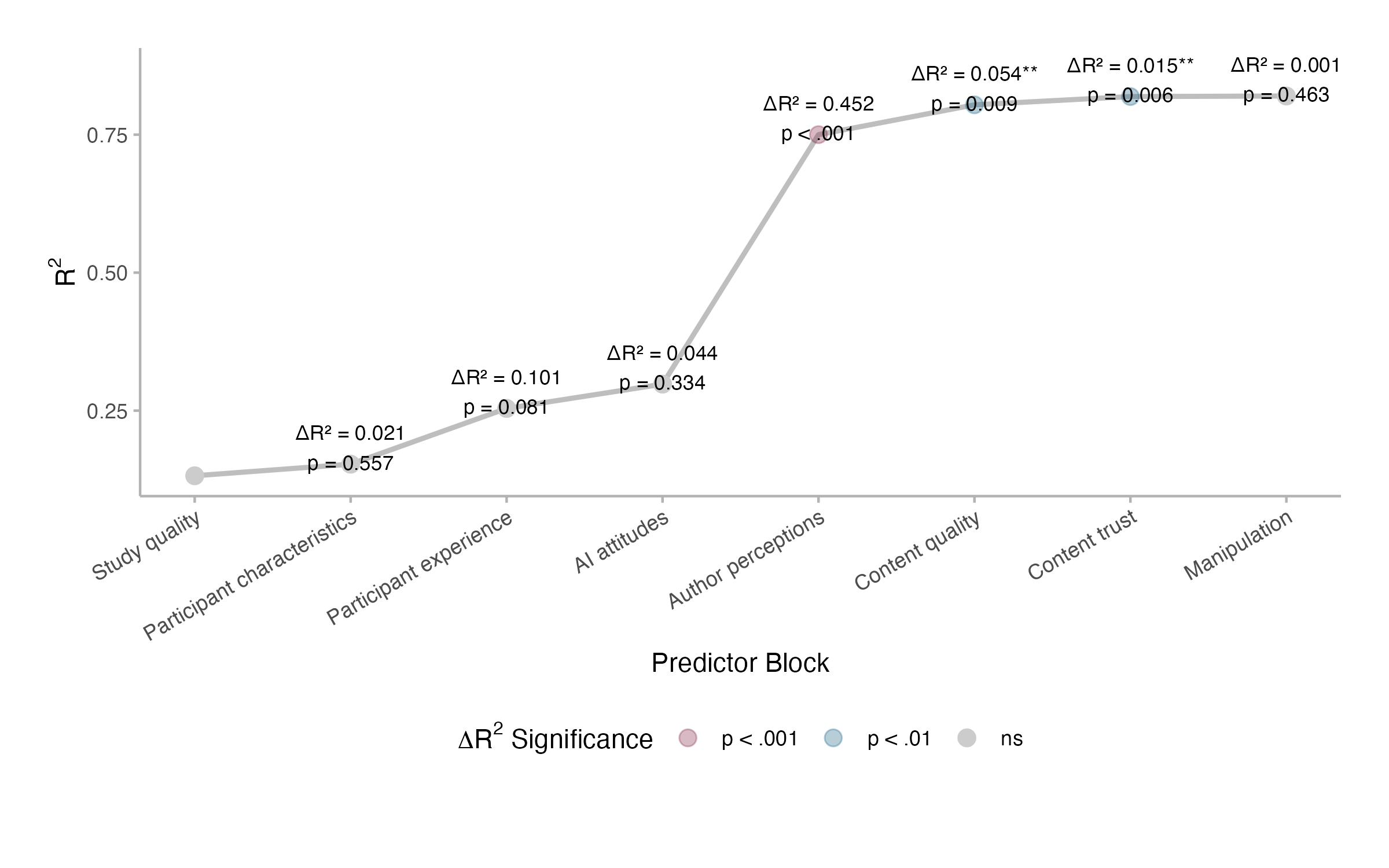

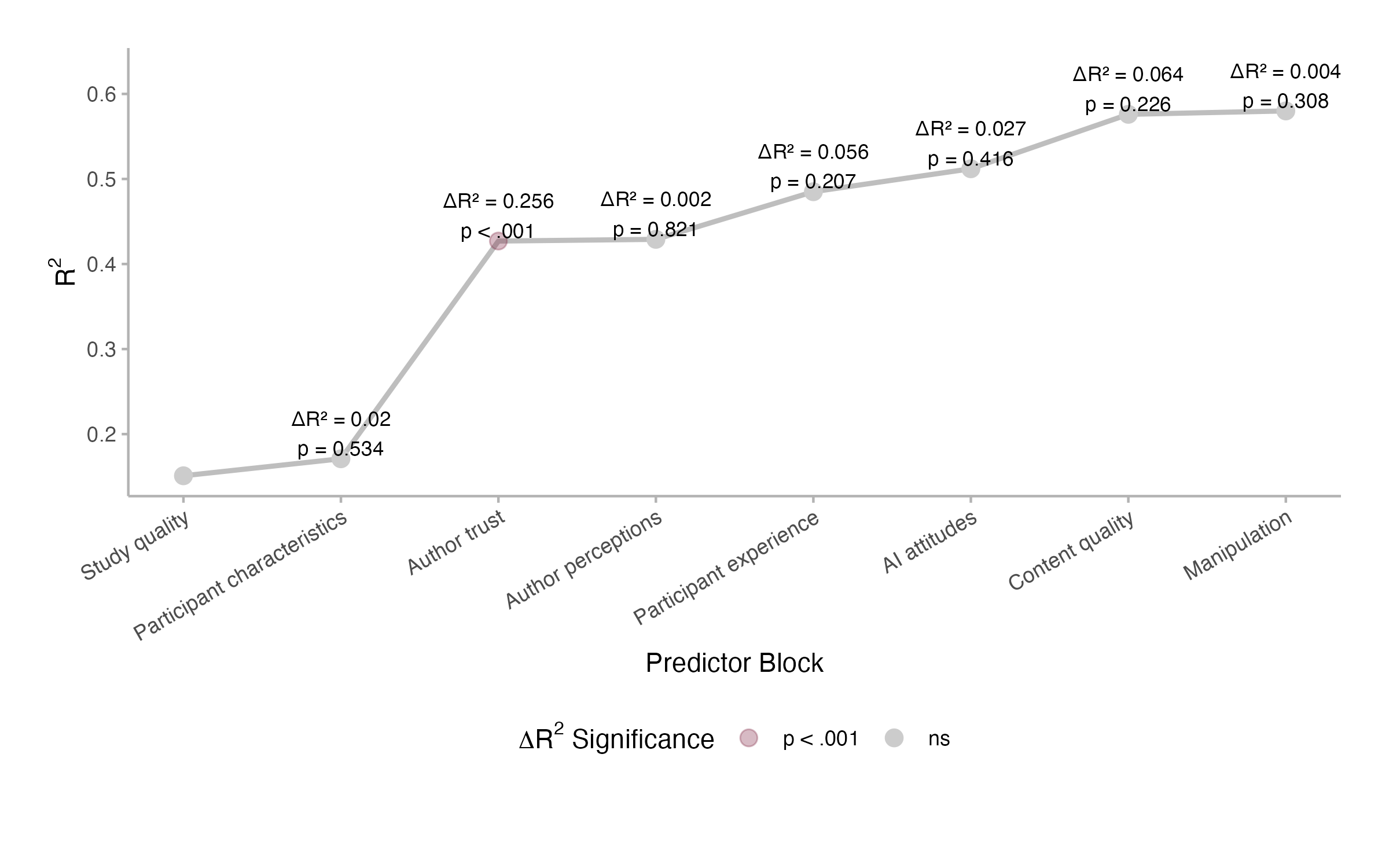

As part of my analysis, I also ran hierarchical regressions to help identify which factors contributed to trust. The breakdowns for each study follow.
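For readers unfamiliar with the method, hierarchical regression enters predictors in blocks and tracks how much the explained variance (R²) grows at each step. The sketch below illustrates the idea on synthetic data only. The predictor names (`age`, `anthro`), the effect sizes, and the two-step ordering are assumptions made for illustration; they are not the variables, data, or models used in these studies.

```python
import random

def r_squared(X, y):
    """R^2 of an ordinary least squares fit of y on the columns of X.

    An intercept column is added here. Coefficients are found by solving
    the normal equations with Gauss-Jordan elimination.
    """
    rows = [[1.0] + list(r) for r in X]
    k = len(rows[0])
    # Augmented matrix for (X'X) b = X'y.
    A = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))]
         for i in range(k)]
    for c in range(k):
        # Partial pivoting for numerical stability.
        p = max(range(c, k), key=lambda i: abs(A[i][c]))
        A[c], A[p] = A[p], A[c]
        for i in range(k):
            if i != c:
                f = A[i][c] / A[c][c]
                A[i] = [a - f * b for a, b in zip(A[i], A[c])]
    beta = [A[i][k] / A[i][i] for i in range(k)]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - sum(b * x for b, x in zip(beta, r))) ** 2
                 for r, yi in zip(rows, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot

# Synthetic, illustrative data (NOT the study data).
random.seed(0)
n = 200
age = [random.gauss(0, 1) for _ in range(n)]      # hypothetical control variable
anthro = [random.gauss(0, 1) for _ in range(n)]   # hypothetical anthropomorphism score
trust = [0.2 * a + 0.6 * h + random.gauss(0, 1) for a, h in zip(age, anthro)]

# Step 1: control variable only. Step 2: add the predictor of interest.
r2_step1 = r_squared([[a] for a in age], trust)
r2_step2 = r_squared([[a, h] for a, h in zip(age, anthro)], trust)
delta_r2 = r2_step2 - r2_step1

print(f"Step 1 R^2: {r2_step1:.3f}")
print(f"Step 2 R^2: {r2_step2:.3f}")
print(f"Delta R^2:  {delta_r2:.3f}")
```

The change in R² between steps is the quantity of interest: it estimates how much additional variance in trust the newly entered block explains beyond the predictors already in the model. In practice a statistics package would also report an F-test for that change, which this sketch omits.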

Study 1

Author trust

Content trust

Study 2

Author trust

Content trust

References

Appelman, A., & Sundar, S. S. (2016). Measuring message credibility: Construction and validation of an exclusive scale. Journalism & Mass Communication Quarterly, 93(1), 59–79. https://doi.org/10.1177/1077699015606057

Bartneck, C., Kulić, D., Croft, E., & Zoghbi, S. (2009). Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. International Journal of Social Robotics, 1(1), 71–81. https://doi.org/10.1007/s12369-008-0001-3

Hendriks, F., Kienhues, D., & Bromme, R. (2015). Measuring laypeople’s trust in experts in a digital age: The Muenster epistemic trustworthiness inventory (METI). PLOS ONE, 10(10), e0139309. https://doi.org/10.1371/journal.pone.0139309